Transcript: Hybrid Cloud Platform and ‘as a Service’ Options Pt. 2

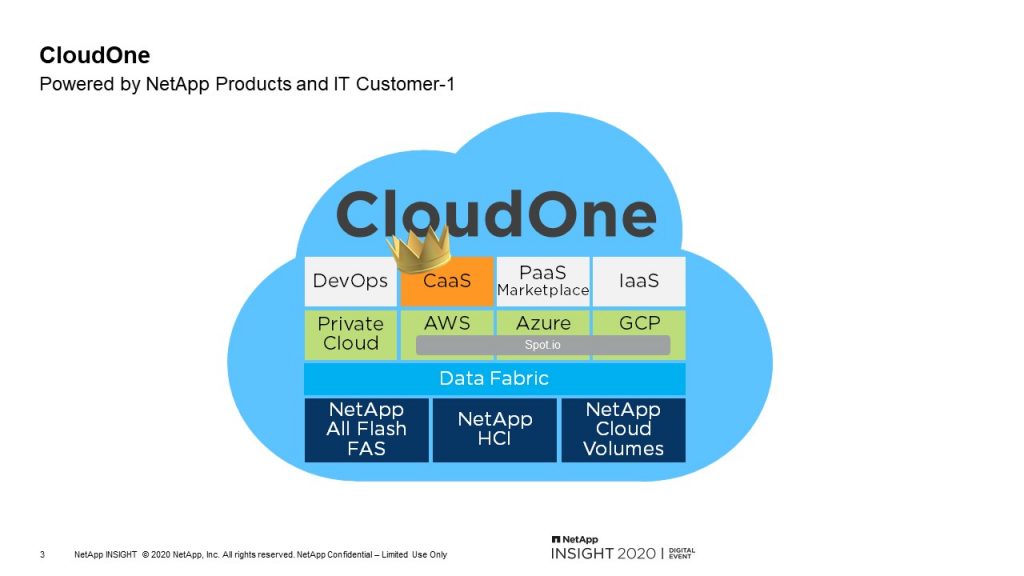

My name is Jonathan Fair, and I’m a Senior DevOps Engineer on the CloudOne team. I just wanted to go over a general understanding of CaaS: why we created it and how our application teams are using it. You heard some of it in Mike’s presentation just now. We have a lot of different offerings that Mike just mentioned. He went over DevOps and IaaS, and I’ll be going over CaaS here a little bit more in depth.

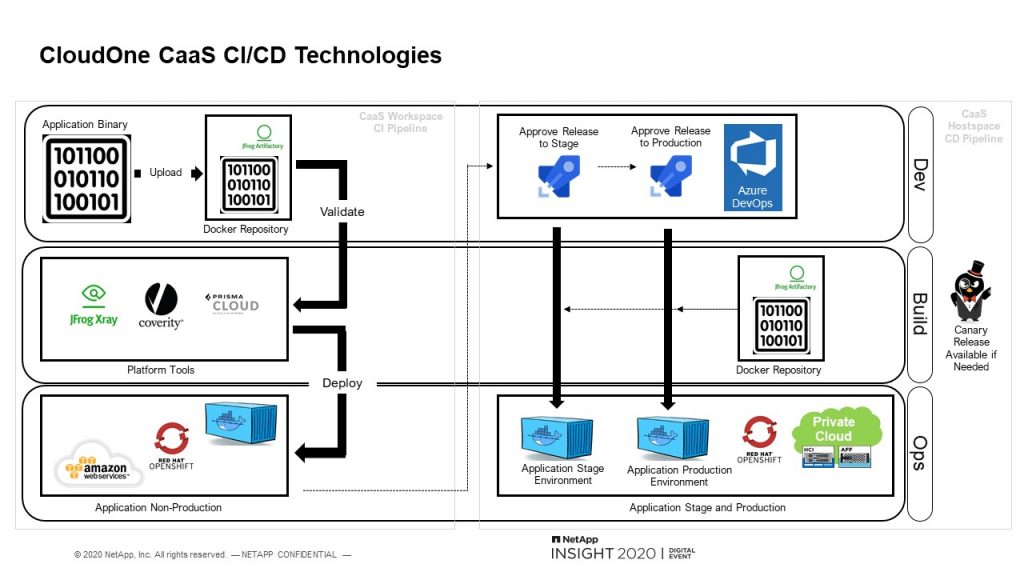

CaaS delivers a pre-defined Helm chart template and an end-to-end CI/CD pipeline that includes security scans and approvals. This allows developers to spend more time improving their application instead of maintaining it and troubleshooting the pipelines.

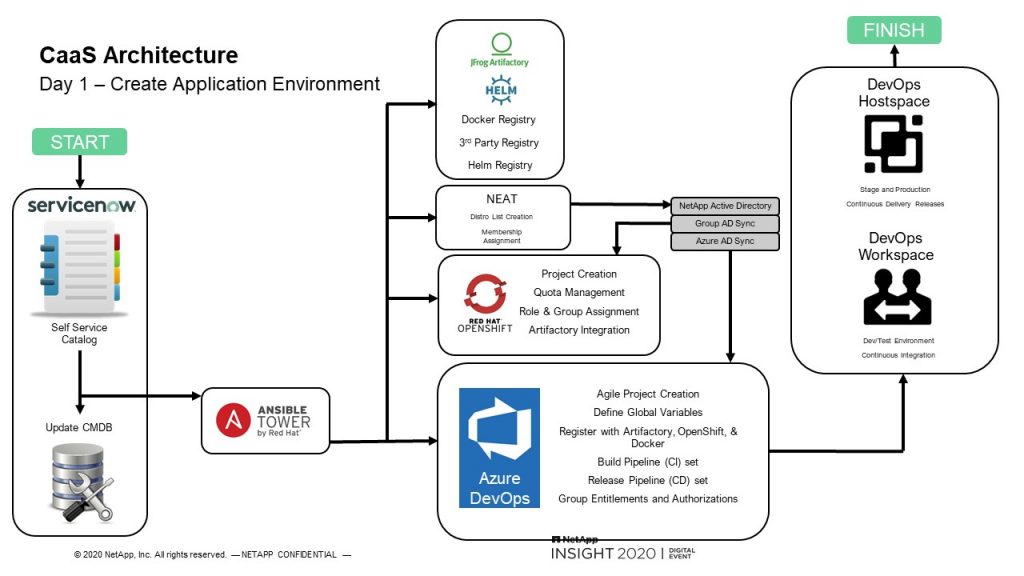

Why’d we build this? In the past, developers were responsible for developing their application. They’re usually not familiar with Kubernetes and security and everything else that may be involved. So they normally would start with a CI/CD pipeline. They’d need to learn and work with Azure Pipelines and several security integrations and tools such as JFrog Xray, SonarQube, Prisma Cloud, Coverity, Docker and Helm. You get the point. The list of everything they’d need to learn is pretty long. So we set out to automate this and provide it as a service. I’ll show you now how our application teams get started using CaaS with the Day-1 automation.

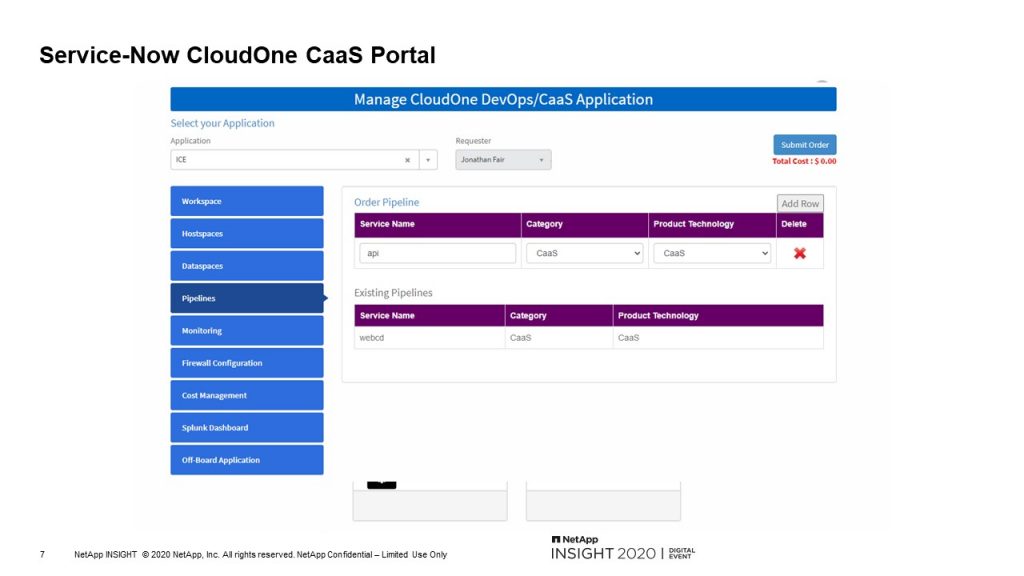

We have our ServiceNow portal. This is for the application owners to manage their CloudOne services. Here they would onboard their application with their tier and cost center. So we do cost tracking depending on what they order and what tier their application is. Then they order any hostspaces.

These are basically namespaces based on the landscape, production stage, t-shirt size and cluster region. The t-shirt size just sets the quotas and limits within the Kubernetes project, such as PVC sizes, number of PVCs, CPUs and memory. Once they order that, they can order any databases using our CloudOne DevOps or database as a service offerings. And finally, they order the CaaS instances that they need. So if their application requires several images, they would order a CaaS pipeline for each image.
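As a rough illustration of how a t-shirt size could translate into limits on a Kubernetes project, here is a minimal Python sketch that builds a `ResourceQuota` manifest as a plain dictionary. The size names, the quota values, the `hostspace_name` naming scheme and both function names are assumptions made up for this example; the talk does not give the actual CloudOne values.

```python
# Hypothetical t-shirt sizes: the real CloudOne values are not given in the talk.
TSHIRT_SIZES = {
    "small": {"cpu": "2", "memory": "4Gi", "pvc_count": 2, "storage": "20Gi"},
    "medium": {"cpu": "4", "memory": "8Gi", "pvc_count": 4, "storage": "50Gi"},
    "large": {"cpu": "8", "memory": "16Gi", "pvc_count": 8, "storage": "100Gi"},
}


def hostspace_name(app, landscape, region):
    """Build an illustrative namespace name (the naming scheme is assumed)."""
    return f"{app}-{landscape}-{region}".lower()


def resource_quota(app, landscape, region, size):
    """Return a Kubernetes ResourceQuota manifest (as a dict) for one hostspace."""
    if size not in TSHIRT_SIZES:
        raise ValueError(f"unknown t-shirt size: {size!r}")
    quota = TSHIRT_SIZES[size]
    namespace = hostspace_name(app, landscape, region)
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{namespace}-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "limits.cpu": quota["cpu"],
                "limits.memory": quota["memory"],
                # Caps the number of PVCs and their total requested storage.
                "persistentvolumeclaims": str(quota["pvc_count"]),
                "requests.storage": quota["storage"],
            }
        },
    }
```

Calling `resource_quota("Billing", "stage", "eastus", "medium")` would yield a manifest for a namespace named `billing-stage-eastus`. A per-claim PVC size cap would need a separate `LimitRange` object, which is left out here.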

Once the user clicks submit, ServiceNow will create the CIs in our CMDB and kick off the playbooks in Ansible Tower. Our playbooks will create Docker repositories and Active Directory groups for developers, leads, DBAs and operations to manage access and perform approvals. They then create OpenShift projects with roles assigned to those Active Directory groups, and an Azure DevOps project is created with repositories, service connections, variable groups and pipelines. And finally, the workspaces and hostspaces are created. You can think of the workspace as more of a namespace where the developers can spin up instances and bring them down quickly, whereas the hostspaces are stage and production environments for more in-depth testing.
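The order of the Day-1 provisioning steps described above can be sketched as a simple plan builder. This is only an illustration in Python, not the actual Ansible playbooks: the function name `day1_plan`, the step wording, the group naming and the assumption of one Docker repository per image are all hypothetical.

```python
# Access groups named in the talk; the exact group naming is assumed.
ROLES = ["developers", "leads", "dbas", "operations"]


def day1_plan(app, images):
    """Return the ordered Day-1 provisioning steps for one application."""
    if not images:
        raise ValueError("at least one image is required")
    plan = [f"cmdb: create configuration items for {app}"]
    # Assumption: one Docker repository per image.
    plan += [f"docker: create repository {app}/{image}" for image in images]
    plan += [f"ad: create group {app}-{role}" for role in ROLES]
    plan.append(f"openshift: create projects for {app} and bind roles to AD groups")
    plan.append(
        f"azure-devops: create project {app} with repositories, "
        "service connections and variable groups"
    )
    # One CaaS pipeline is ordered per image.
    plan += [f"azure-devops: create pipeline for image {image}" for image in images]
    plan.append(f"openshift: create workspaces and hostspaces for {app}")
    return plan
```

For example, `day1_plan("billing", ["api", "web"])` returns a list that starts with the CMDB step, contains two pipeline steps, and ends with the creation of workspaces and hostspaces.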

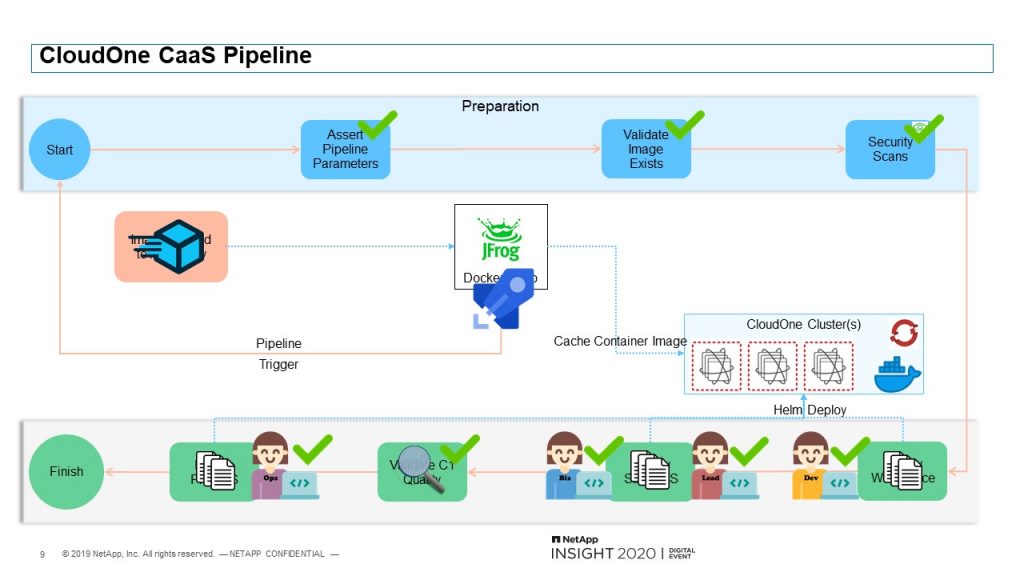

Once everything is provisioned on Day 1, the Day 2 automation begins. And this is the pipeline that we actually provide during the Day 1 automation. Developers can begin deploying their application using this pipeline. Once the developers make any necessary changes to the Helm chart template that we provide, they can push their image to our JFrog Artifactory. This will automatically trigger their CI/CD pipeline. The pipeline will make sure the provided parameters and Helm chart are valid and make sure the image exists in Artifactory.
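The validation step described above could look roughly like the following Python sketch. It is not the real pipeline code: the parameter names in `REQUIRED_PARAMETERS`, the function `preflight_errors` and the in-memory `registry_images` set (standing in for a lookup against Artifactory) are assumptions. The three `Chart.yaml` fields checked are the ones Helm itself requires.

```python
# Fields that Helm requires in Chart.yaml.
REQUIRED_CHART_FIELDS = ("apiVersion", "name", "version")
# Hypothetical pipeline parameters: the real parameter names are not in the talk.
REQUIRED_PARAMETERS = ("image", "tag", "hostspaces")


def preflight_errors(parameters, chart, registry_images):
    """Collect validation errors; an empty list means the run may proceed.

    `registry_images` is a set of "image:tag" strings standing in for a
    real registry lookup.
    """
    errors = []
    for key in REQUIRED_PARAMETERS:
        if not parameters.get(key):
            errors.append(f"missing pipeline parameter: {key}")
    for field in REQUIRED_CHART_FIELDS:
        if not chart.get(field):
            errors.append(f"Chart.yaml is missing required field: {field}")
    image, tag = parameters.get("image"), parameters.get("tag")
    if image and tag and f"{image}:{tag}" not in registry_images:
        errors.append(f"image not found in registry: {image}:{tag}")
    return errors
```

Collecting every error before failing, rather than stopping at the first one, lets a developer fix all problems in a single pass.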

It’ll then perform both code and image security scans and provide the results to the developers. The pipeline will continue to the workspace even if there are vulnerabilities, so that the developers can fix those vulnerabilities and test the fixes in their development workspace. If the developer is not satisfied or not happy with the deployment, or needs to make some more changes before going on to the next stage, developers can halt the pipeline and start the process over after making the needed changes. If the developer is happy with the changes and approves it, the pipeline will remove the deployment from the workspace so the developer can begin working on the next feature. In the meantime, the pipeline will wait for the application leads to approve the deployment to the stage hostspace. Once approved, it will perform the deployment and allow the business to review the application changes in the stage environment.

Once the business approves this, it will check the security scans performed earlier and see if there are any major vulnerabilities. If there were any, it will halt the pipeline and require the developer to resolve those. If there are no major vulnerabilities, it will go to the operations queue to allow them to check the logs in Splunk, perform any load testing, synthetic transaction monitoring or whatever they decide to do. Once operations approves, it will deploy to production. End-to-end, this entire process generally takes 15 to 20 minutes, depending on how long it takes for the individual team members to go through the approvals.
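The approval flow described above, from the workspace deployment through to production, can be sketched as a small function that walks the gates in order. This is a simplified Python model under stated assumptions: the function name `run_pipeline`, the approval keys and the event strings are invented for illustration, and a real Azure DevOps pipeline would wait on approvals rather than return.

```python
def run_pipeline(approvals, major_vulnerabilities):
    """Walk the approval gates in order and return the events that occurred.

    `approvals` maps "developer", "lead", "business" and "operations" to
    booleans; `major_vulnerabilities` is the count found by the scans.
    """
    # Scans run first, but the workspace deployment happens regardless of
    # findings so that developers can fix and test in their workspace.
    events = ["scan code and image", "deploy to workspace"]
    if not approvals.get("developer"):
        return events + ["halted: developer did not approve"]
    events.append("remove deployment from workspace")
    if not approvals.get("lead"):
        return events + ["waiting: lead approval"]
    events.append("deploy to stage hostspace")
    if not approvals.get("business"):
        return events + ["waiting: business approval"]
    # The vulnerability gate is only enforced before production.
    if major_vulnerabilities > 0:
        return events + ["halted: major vulnerabilities must be resolved"]
    if not approvals.get("operations"):
        return events + ["waiting: operations approval"]
    events.append("deploy to production hostspace")
    return events
```

With every approval granted and no major vulnerabilities, the returned list ends with the production deployment. With any major vulnerability, the run still reaches the stage hostspace but halts before the operations queue.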

So this is what the pipeline looks like in Azure DevOps. This is one example. And this example is with one stage hostspace and one production hostspace. The hostspaces can be defined very easily. The user in their repo can just pick which hostspaces they want to deploy to. So if they have one application that they want to deploy to two stage hostspaces and a specific production hostspace, they can easily define that. In this example, it has the workspace, one stage hostspace and one production hostspace. Currently, it’s deployed to the workspace and waiting for the developer to review and approve that. If the developer approves that, then it’ll go on to the stage hostspace.
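To make the hostspace selection concrete, here is a minimal Python sketch of how a list of chosen hostspaces might expand into an ordered set of deployment stages. The talk does not show the actual repository configuration format, so the `(name, landscape)` pairs, the hostspace names and the function `build_stages` are assumptions.

```python
LANDSCAPE_ORDER = ("stage", "production")


def build_stages(selected_hostspaces):
    """Order the deployment stages: workspace, then stage, then production.

    `selected_hostspaces` is a list of (name, landscape) pairs, where
    landscape is "stage" or "production".
    """
    for name, landscape in selected_hostspaces:
        if landscape not in LANDSCAPE_ORDER:
            raise ValueError(f"unknown landscape for {name}: {landscape!r}")
    stages = ["workspace"]  # every run starts in the developer workspace
    for landscape in LANDSCAPE_ORDER:
        stages += [name for name, kind in selected_hostspaces if kind == landscape]
    return stages
```

For the case mentioned above, two stage hostspaces and one production hostspace, `build_stages([("prod-east", "production"), ("stage-east", "stage"), ("stage-west", "stage")])` returns `["workspace", "stage-east", "stage-west", "prod-east"]`.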

CaaS has proven to us and our application teams that automation can significantly reduce man-hours spent on setup, day-to-day operations and troubleshooting. It provides a reliable application while reducing our compute footprint. CaaS allows our developers to do what they do best, which is develop their application. It provides enterprise-level security to all applications without the need for monthly OS patching downtimes and routine manual security audits. So this is our DevSecOps service for images and containers.